Continued Learning

Our research in Continued Learning develops AI systems that continuously acquire and refine knowledge over time without forgetting what they have already learned. We use analytic learning approaches to build methods that adapt efficiently to new data while preserving existing capabilities.

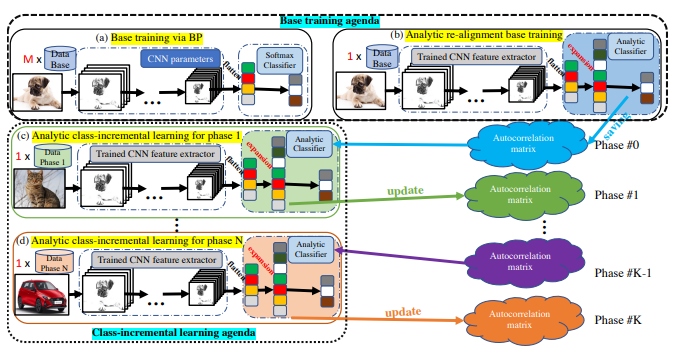

We introduce a novel approach to class-incremental learning (CIL) that addresses the challenges of catastrophic forgetting and data privacy. The proposed Analytic Class-Incremental Learning (ACIL) framework allows absolute memorization of past knowledge without storing historical data, thereby ensuring data privacy. We theoretically validate that ACIL can achieve results identical to traditional joint-learning methods while using only the current phase's data. Empirical results demonstrate that ACIL outperforms existing state-of-the-art methods, particularly in large-phase scenarios (e.g., 25 and 50 phases).
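The equivalence with joint learning can be illustrated with a small NumPy sketch. It assumes the features come from a frozen backbone (replaced here by random vectors) and that the classifier is a ridge-regression head updated by recursive least squares. The class `RecursiveRidge`, the helper `joint_ridge`, and the regularization value `gamma` are hypothetical names chosen for this illustration, not the ACIL implementation itself.

```python
import numpy as np


def joint_ridge(X, Y, gamma):
    """Closed-form ridge solution using all data at once (joint learning)."""
    dim = X.shape[1]
    return np.linalg.solve(X.T @ X + gamma * np.eye(dim), X.T @ Y)


class RecursiveRidge:
    """Ridge-regression head updated phase by phase without old data.

    Illustrative assumption: features are produced by a frozen extractor.
    """

    def __init__(self, dim, gamma=1.0):
        # R is the inverse of the regularized feature autocorrelation matrix.
        self.R = np.eye(dim) / gamma
        self.W = np.zeros((dim, 0))

    def fit_phase(self, X, Y):
        # Y is one-hot over all classes seen so far; add zero-initialized
        # weight columns for the classes introduced in this phase.
        new_cols = Y.shape[1] - self.W.shape[1]
        self.W = np.hstack([self.W, np.zeros((self.W.shape[0], new_cols))])
        # Woodbury identity: update R using only the current phase's features.
        K = np.linalg.inv(np.eye(X.shape[0]) + X @ self.R @ X.T)
        self.R = self.R - self.R @ X.T @ K @ X @ self.R
        # Correct the weights by the residual on the current phase's data.
        self.W = self.W + self.R @ X.T @ (Y - X @ self.W)


# Synthetic stand-in for backbone features: 6 classes, 2 new classes per phase.
rng = np.random.default_rng(0)
num_classes, per_class, dim = 6, 20, 8
labels = np.repeat(np.arange(num_classes), per_class)
X_all = rng.normal(size=(labels.size, dim))
Y_all = np.eye(num_classes)[labels]

model = RecursiveRidge(dim, gamma=1.0)
for start in range(0, num_classes, 2):
    mask = (labels >= start) & (labels < start + 2)
    # Only the current phase's samples are visible to the update.
    model.fit_phase(X_all[mask], Y_all[mask][:, : start + 2])

W_joint = joint_ridge(X_all, Y_all, gamma=1.0)
max_gap = np.abs(model.W - W_joint).max()
print(f"max |W_incremental - W_joint| = {max_gap:.2e}")
```

In this sketch the incremental weights agree with the joint solution up to floating-point error, even though each phase sees only its own samples and the only state carried forward is `R` and `W`.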

We introduce analytic federated learning (AFL), a new training paradigm that brings analytical (i.e., closed-form) solutions to the federated learning (FL) community. AFL draws inspiration from analytic learning, a gradient-free technique that trains neural networks with analytical solutions in one epoch. In the local client training stage, AFL requires only one epoch of training, eliminating the need for multi-epoch updates. In the aggregation stage, we derive an absolute aggregation (AA) law. This AA law allows single-round aggregation, removing the need for multiple aggregation rounds.
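The idea of single-round aggregation over closed-form client solutions can be sketched as follows. This is a simplified stand-in rather than the AA law as derived in the paper: it assumes each client fits a ridge-regression head on fixed features and sends its weights together with its regularized Gram matrix. The functions `client_update` and `aggregate`, the value of `gamma`, and the synthetic data are all hypothetical.

```python
import numpy as np


def client_update(X, Y, gamma):
    """One-pass local training: closed-form ridge solution on client data."""
    C = X.T @ X + gamma * np.eye(X.shape[1])  # regularized Gram matrix
    W = np.linalg.solve(C, X.T @ Y)           # local analytic solution
    return W, C


def aggregate(updates, gamma):
    """Single-round aggregation of (W, C) pairs into one global solution.

    Since C_k @ W_k equals X_k^T Y_k, summing these terms recovers the
    global cross-correlation. Each C_k contains one copy of gamma * I,
    so the surplus copies are removed before solving.
    """
    dim = updates[0][1].shape[0]
    C_sum = sum(C for _, C in updates)
    Q_sum = sum(C @ W for W, C in updates)
    C_global = C_sum - (len(updates) - 1) * gamma * np.eye(dim)
    return np.linalg.solve(C_global, Q_sum)


# Synthetic features and one-hot labels split across 3 clients.
rng = np.random.default_rng(0)
dim, num_classes, gamma = 8, 4, 1.0
X = rng.normal(size=(120, dim))
Y = np.eye(num_classes)[rng.integers(0, num_classes, size=120)]
client_rows = np.array_split(np.arange(120), 3)

updates = [client_update(X[rows], Y[rows], gamma) for rows in client_rows]
W_fed = aggregate(updates, gamma)

# Reference: the same ridge problem solved centrally on the pooled data.
W_central = np.linalg.solve(X.T @ X + gamma * np.eye(dim), X.T @ Y)
fed_gap = np.abs(W_fed - W_central).max()
print(f"max |W_fed - W_central| = {fed_gap:.2e}")
```

In this sketch each client communicates once, the server solves one linear system, and the aggregated weights match the centralized solution up to floating-point error regardless of how the rows are partitioned.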